I implemented procedural city generation in OpenGL. In this blog post, I will explain my implementation process and discuss my results.

My initial plan was to implement the following features:

- Procedural grid rendering using Wang Tiles and noise sampling.

- Building generation using L-system grammars and level-of-detail rendering.

- Greenery generation using L-system grammars and noise sampling.

In the sections below, I will explain my implementation process for each feature in detail.

Grid Generation

The purpose of generating a grid was to determine the contents of each tile in a manner that would look natural. To achieve this, my plan was to sample from a noise function to determine a few initial tiles, and then generate the rest of the grid by using Wang tiles with appropriate constraints or, if that produced unacceptable results, use Wave Function Collapse to generate the rest of the grid seamlessly.

I initially planned on using Perlin noise through an existing library called ‘stb_perlin.h’. However, its stb_perlin_noise3() function only returned values with very small magnitudes (below 0.1 in most cases) for reasons I couldn’t figure out, so I decided to implement the function myself. My first attempt was to reuse the function I wrote for my third homework in this course, but it produced a checker-like pattern. The video below shows a 10 by 10 grid that was generated by sampling from my Perlin noise function. To visualize the contents initially, I simply rendered a picture for each main tile type (grass for greenery, a straight road for the roads, and a parking lot for the buildings).

While looking for some resources on the technical details of Perlin noise, I came across this video. I decided to reimplement using this video as a guide, and my new function provided much better results:

For this implementation, I used 2D Perlin noise with the following gradient vectors:

const glm::vec2 gradients[8] = {

glm::vec2(1, 0),

glm::vec2(-1, 0),

glm::vec2(0, 1),

glm::vec2(0, -1),

glm::vec2(1, 1),

glm::vec2(-1, 1),

glm::vec2(1, -1),

glm::vec2(-1, -1)

};

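The interpolation function for this implementation is different from the one on the course slides. Instead of using $-6|x|^5 + 15|x|^4 - 10|x|^3 + 1$, it uses $6x^5 - 15x^4 + 10x^3$. The two are complementary: for $x$ in $[0, 1]$ the second is one minus the first, so the slide version gives the weight of the near corner while this one gives the weight of the far corner. Other than that, the implementation is mostly similar to the one on our course slides: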

int hash(int x, int y) {

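return ((x + y) & 255) & 7; // Same result for non-negative inputs; the bitmask also keeps the index in [0, 7] for negative coordinates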

}

float fade(float t) {

return t * t * t * (t * (t * 6 - 15) + 10);

}

float lerp(float a, float b, float t) {

return a + t * (b - a);

}

float dotGridGradient(int ix, int iy, float x, float y) {

glm::vec2 gradient = gradients[hash(ix, iy)];

float dx = x - (float)ix;

float dy = y - (float)iy;

return (dx * gradient.x + dy * gradient.y);

}

float perlin(float x, float y) {

// Determine grid cell coordinates

int x0 = (int)floor(x);

int x1 = x0 + 1;

int y0 = (int)floor(y);

int y1 = y0 + 1;

// Determine interpolation weights

float sx = fade(x - (float)x0);

float sy = fade(y - (float)y0);

// Interpolate between grid point gradients

float n0, n1, ix0, ix1, value;

n0 = dotGridGradient(x0, y0, x, y);

n1 = dotGridGradient(x1, y0, x, y);

ix0 = lerp(n0, n1, sx);

n0 = dotGridGradient(x0, y1, x, y);

n1 = dotGridGradient(x1, y1, x, y);

ix1 = lerp(n0, n1, sx);

value = lerp(ix0, ix1, sy);

//return (value + 1) / 2;

return std::abs(value); // std::abs from <cmath> selects the float overload

}

To map the values between 0 and 1, I tried both (n(x,y)+1)/2 and |n(x,y)|. From the tests I’ve done, the second option seemed to yield better results, but this mostly depends on how one maps the tile values to the noise values.
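To illustrate how a mapped noise value could be turned into a tile type, here is a minimal sketch. The `Tile` enum, the `mapNoise` and `tileFromNoise` names, and the two thresholds are hypothetical and chosen only for illustration; as noted above, the right thresholds depend on how the noise values are mapped.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical tile categories; the names are assumptions for this sketch.
enum class Tile { Road, Greenery, Building };

// Map a raw noise value to [0, 1] by taking its absolute value, clamped to 1.
float mapNoise(float n) {
    return std::fmin(std::fabs(n), 1.0f);
}

// Pick a tile type from a mapped noise value v in [0, 1].
// roadMax and greenMax are placeholder thresholds, not tuned values.
Tile tileFromNoise(float v, float roadMax = 0.15f, float greenMax = 0.45f) {
    assert(v >= 0.0f && v <= 1.0f);
    if (v < roadMax)  return Tile::Road;
    if (v < greenMax) return Tile::Greenery;
    return Tile::Building;
}
```

Calling `tileFromNoise(mapNoise(perlin(x, y)))` for every cell would then give one tile type per grid position. Moving the two thresholds changes how much of the grid each tile type covers.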

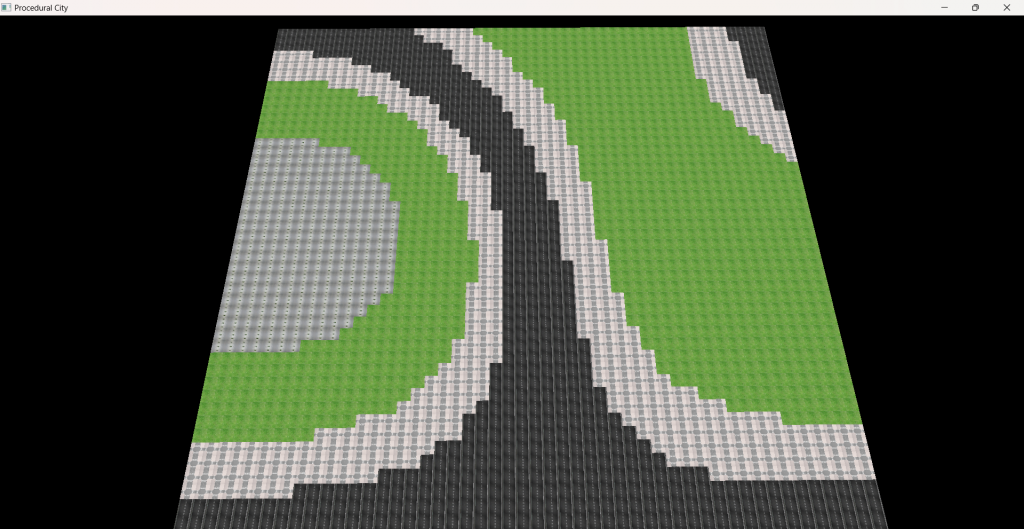

Once I increased the grid size and changed the mapping a bit, I got the following pattern:

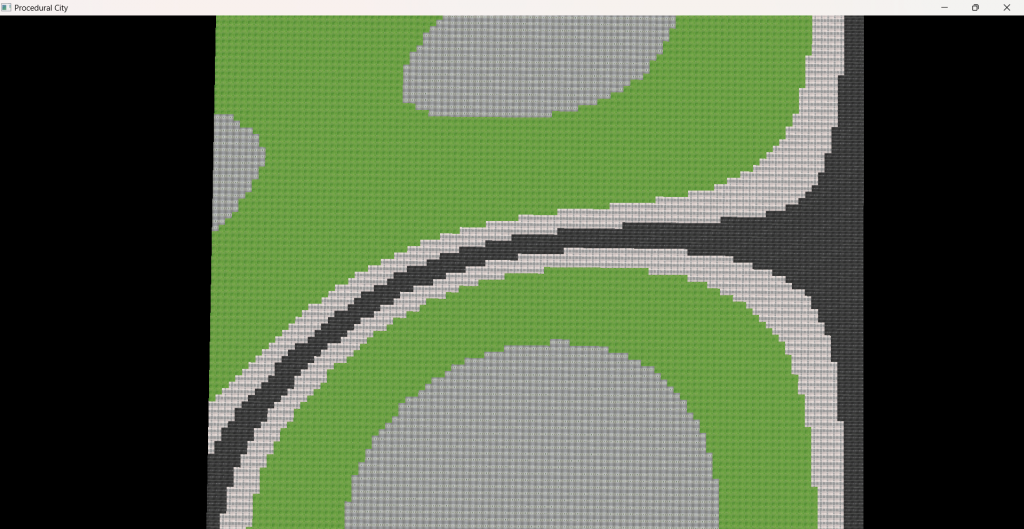

The black tiles represent roads, the green tiles represent the greenery, and the greyish tiles that are clumped together represent the building tiles. I was happy with this result, so I increased the size to 100 by 100 and tweaked the mapping just a bit more to produce this pattern:

This pattern looks quite nice in my opinion. The building blocks are grouped together, which should lead to more buildings being generated near each other with greenery tiles between building clusters. I should mention that I used the gradient vectors without random indexing or creating random gradient vectors, so the Perlin noise map is constant. This was fine though, as my plan was to just sample some initial tiles and then start generating from those tiles according to their constraints, leading to varying but consistent patterns. At least, that was my plan.
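Since the noise map is constant, one way to vary it would be to index the gradients through a shuffled permutation table, as classic Perlin noise does. The sketch below is only an assumption about how that could look; `makePermutation` and `seededHash` are hypothetical names and are not part of my implementation.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <numeric>
#include <random>

// Build a shuffled table of the values 0..255 from a seed.
std::array<int, 256> makePermutation(unsigned seed) {
    std::array<int, 256> perm{};
    std::iota(perm.begin(), perm.end(), 0);  // 0, 1, ..., 255
    std::mt19937 rng(seed);
    std::shuffle(perm.begin(), perm.end(), rng);
    return perm;
}

// Hash a lattice point to a gradient index in [0, 7].
// The bitmasks keep both lookups in range for negative coordinates too.
int seededHash(const std::array<int, 256>& perm, int x, int y) {
    int inner = perm[x & 255];
    int index = perm[(inner + y) & 255] & 7;
    assert(index >= 0 && index < 8);
    return index;
}
```

Replacing the call to `hash(ix, iy)` with `seededHash(perm, ix, iy)` would produce a different noise map for each seed while keeping the same seed reproducible.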

Once that was done, I tried to add some constraints and generate the tiles according to them. Below are two 25 by 25 grids where the first one was created with 5 samples and the second one was created with 25 samples:

Although more samples led to more variance, the overall pattern still looked mostly random because my constraints were inadequate. I later tried to improve the pattern by adding more tile types and different constraints, but I couldn’t achieve a good-looking pattern.
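To make the idea of generating from constraints more concrete, here is a minimal sketch of one possible approach: fill the grid in row-major order and, for each empty cell, pick a random tile that is allowed next to its left and top neighbours. The tile set, the `allowed` table, and the function names are all assumptions for illustration. This is a much simpler scheme than proper Wang tiling or Wave Function Collapse, and the rule set is not necessarily the one from my project.

```cpp
#include <cassert>
#include <random>
#include <vector>

enum Tile { ROAD = 0, GREENERY = 1, BUILDING = 2, EMPTY = 3 };

// allowed[a][b] is true when tile b may sit next to tile a.
// Assumed rule for this sketch: buildings and greenery never touch directly.
const bool allowed[3][3] = {
    /* ROAD     */ { true, true,  true  },
    /* GREENERY */ { true, true,  false },
    /* BUILDING */ { true, false, true  },
};

// True if tile t may be placed next to neighbour n (an empty neighbour allows anything).
bool compatible(Tile n, Tile t) {
    return n == EMPTY || allowed[n][t];
}

// Fill every EMPTY cell of a size x size grid in row-major order.
// Pre-seeded cells (for example, tiles sampled from noise) are left untouched.
void fillGrid(std::vector<Tile>& grid, int size, unsigned seed) {
    assert(static_cast<int>(grid.size()) == size * size);
    std::mt19937 rng(seed);
    for (int y = 0; y < size; ++y) {
        for (int x = 0; x < size; ++x) {
            Tile& cell = grid[y * size + x];
            if (cell != EMPTY) continue;
            Tile left = x > 0 ? grid[y * size + x - 1] : EMPTY;
            Tile top  = y > 0 ? grid[(y - 1) * size + x] : EMPTY;
            std::vector<Tile> options;
            for (Tile t : {ROAD, GREENERY, BUILDING})
                if (compatible(left, t) && compatible(top, t)) options.push_back(t);
            // ROAD is compatible with everything, so options is never empty.
            std::uniform_int_distribution<int> pick(0, static_cast<int>(options.size()) - 1);
            cell = options[pick(rng)];
        }
    }
}
```

Because this sketch only looks at the left and top neighbours, a pre-seeded cell can still conflict with a tile placed just before it. Handling that properly requires checking all four neighbours and backtracking, or propagating constraints as Wave Function Collapse does.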

Tree Generation

To generate trees, I utilized an L-system grammar. I was initially also going to sample from a noise function to determine the positions of trees within a tile, but since the tiles were already quite small and the buildings were going to be scaled according to the tiles, I decided to render one tree per greenery tile and determine the type of tree at that tile by sampling from the mapped Perlin noise values.

The L-system I used for the trees works as follows:

Forward (‘F’):

- Calculate the next position by moving forward.

- Add a cylinder segment.

- Update the current position.

Rotate Right (‘+’):

- Rotate by 25 degrees around the Z-axis in a positive direction.

Rotate Left (‘-’):

- Rotate by 25 degrees around the Z-axis in a negative direction.

Push State (‘[’):

- Push the current transformation matrix onto the stack.

- Push the current position onto the stack.

- Push the current thickness (as a scale matrix) onto the stack.

Pop State (‘]’):

- Pop the thickness from the stack and update thickness.

- Pop the current position from the stack and update current position.

- Pop the current transformation matrix from the stack and update the current matrix.

Below is the code for this system:

void parseLSystem(const std::string& lSystemString, std::vector<glm::vec3>& vertices, const glm::vec3& startPosition) {

std::stack<glm::mat4> stack;

glm::mat4 currentMatrix = glm::translate(glm::mat4(1.0f), startPosition);

glm::vec3 currentPosition = startPosition;

glm::vec3 forwardVector(0.0f, 0.5f, 0.0f); // Determines the length over a direction

float initialThickness = 0.09f;

float thickness = initialThickness;

for (char c : lSystemString) {

if (c == 'F') {

glm::vec3 nextPosition = currentPosition + glm::vec3(currentMatrix * glm::vec4(forwardVector, 0.0f));

addCylinderSegment(vertices, currentPosition, nextPosition, thickness);

currentPosition = nextPosition;

}

else if (c == '+') {

currentMatrix = glm::rotate(currentMatrix, glm::radians(25.0f), glm::vec3(0.0f, 0.0f, 1.0f));

}

else if (c == '-') {

currentMatrix = glm::rotate(currentMatrix, glm::radians(-25.0f), glm::vec3(0.0f, 0.0f, 1.0f));

}

else if (c == '[') {

stack.push(currentMatrix);

stack.push(glm::translate(glm::mat4(1.0f), currentPosition));

stack.push(glm::scale(glm::mat4(1.0f), glm::vec3(thickness)));

}

else if (c == ']') {

thickness = stack.top()[0][0]; // The scale matrix stores the thickness on its diagonal

stack.pop();

currentPosition = glm::vec3(stack.top()[3]);

stack.pop();

currentMatrix = stack.top();

stack.pop();

}

thickness *= 0.8f; // Runs after every symbol (not only 'F'), so the thickness shrinks along the whole string

}

}

By using this system, I generated 6 different tree structures with different strings. Below are the outputs of each string.

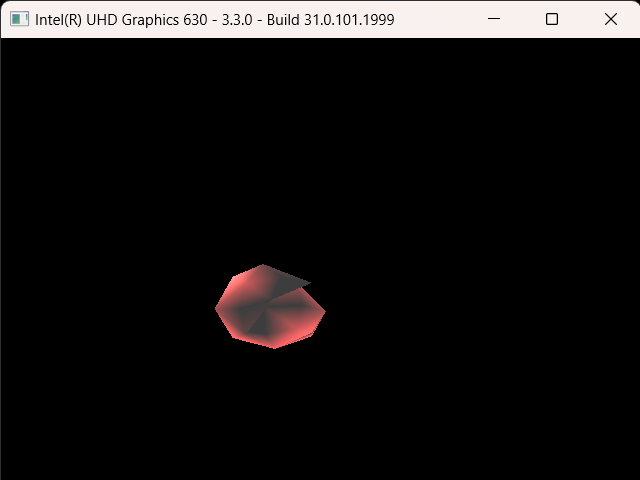

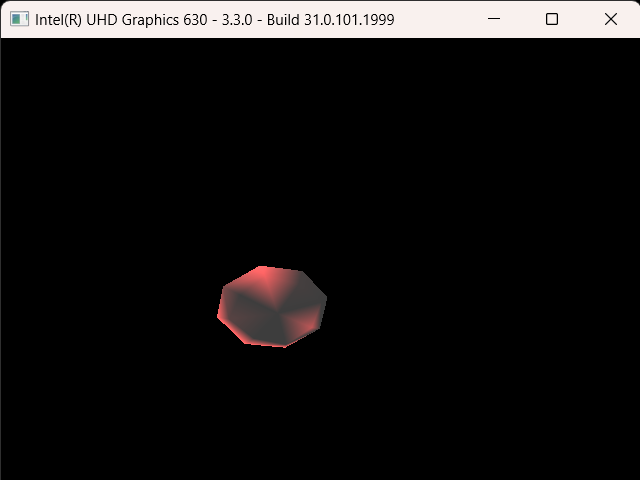

Basic branching tree (“F[+F]F[-F]F”):

Dense branching tree (“F[+F[+F]F[-F]]F[-F[+F][-F]F]”):

Somewhat symmetrical tree (“F[+F[+F]F[-F]]F[-F[+F]F]F[+F]F[-F]”):

Wide spread tree (“F[+F[+F[+F]F[-F]]F[-F[+F]F[-F]]]F[-F[+F]F[-F[+F]F[-F]]]F[+F]F[-F]”):

High complexity tree (“F[+F[+F[+F]F[-F]]F[-F[+F]F[-F]]]F[-F[+F[+F]F[-F]]F[-F[+F]F[-F]]]F[+F[+F[+F]F[-F]]F[-F[+F]F[-F]]]F[-F]”):

Bifurcating tree (“F[+F[+F]F[-F]]F[-F[+F]F[-F[+F]F[-F]]]F[+F[+F]F[-F]]”):

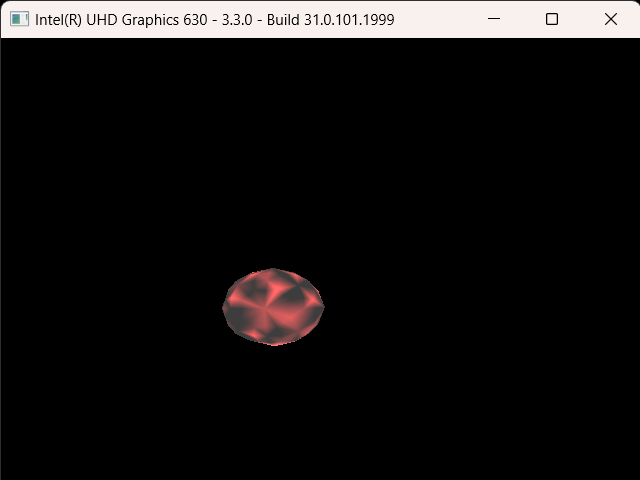

And below are trees generated on a 25 by 25 grid:

I was going to implement a function to generate several random tree strings according to the L-system rules, but I didn’t have enough time to implement it (I couldn’t even render leaves for the trees).
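For reference, generating strings from L-system rules usually means repeatedly rewriting every symbol that has a production rule. Here is a minimal sketch of that rewriting step, assuming a single hypothetical rule that replaces ‘F’ with the basic branching pattern. `expandLSystem` is an illustrative name and is not part of my project.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Rewrite every symbol of `axiom` `iterations` times using `rules`.
// Symbols without a rule (such as '[', ']', '+', '-') are copied unchanged.
std::string expandLSystem(const std::string& axiom,
                          const std::unordered_map<char, std::string>& rules,
                          int iterations) {
    assert(iterations >= 0);
    std::string current = axiom;
    for (int i = 0; i < iterations; ++i) {
        std::string next;
        for (char c : current) {
            auto it = rules.find(c);
            if (it != rules.end()) next += it->second;  // apply the production
            else next += c;                             // keep the symbol as-is
        }
        current = next;
    }
    return current;
}
```

With the rule `{'F', "F[+F]F[-F]F"}`, one iteration starting from "F" yields the basic branching tree string, and the result could be passed straight to parseLSystem. Random variation could be added by storing several candidate replacements per symbol and picking one at random.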

Building Generation

To generate the buildings, I again used L-system grammars. The building grammar is quite simple:

Wall (‘F’):

- Add a wall segment from current position to next position.

- Rotate by -90 degrees for the next segment.

Window (‘W’):

- Similar to ‘F’, but adds a window segment instead of a wall.

Door (‘D’):

- Similar to ‘F’, but adds a door segment instead of a wall.

Roof (‘R’):

- Add a roof from the current position.

Move Up (‘+’):

- Move upwards by height. Basically creates a new floor.

And here’s the code for the system:

void parseBuildingLSystem(const std::string& lSystemString, std::vector<glm::vec3>& vertices, const glm::vec3& startPosition) {

std::stack<glm::mat4> stack;

glm::mat4 currentMatrix = glm::translate(glm::mat4(1.0f), startPosition);

glm::vec3 currentPosition = startPosition;

glm::vec3 forwardVector(1.0f, 0.0f, 0.0f); // Forward vector for building segments

for (char c : lSystemString) {

if (c == 'F') {

glm::vec3 nextPosition = currentPosition + glm::vec3(currentMatrix * glm::vec4(forwardVector, 0.0f));

addBuildingSegment(vertices, currentPosition, nextPosition, WALL);

currentPosition = nextPosition;

currentMatrix = glm::rotate(currentMatrix, glm::radians(-90.0f), glm::vec3(0.0f, 1.0f, 0.0f));

}

else if (c == 'W') {

glm::vec3 nextPosition = currentPosition + glm::vec3(currentMatrix * glm::vec4(forwardVector, 0.0f));

addBuildingSegment(vertices, currentPosition, nextPosition, WINDOW);

currentPosition = nextPosition;

currentMatrix = glm::rotate(currentMatrix, glm::radians(-90.0f), glm::vec3(0.0f, 1.0f, 0.0f));

}

else if (c == 'D') {

glm::vec3 nextPosition = currentPosition + glm::vec3(currentMatrix * glm::vec4(forwardVector, 0.0f));

addBuildingSegment(vertices, currentPosition, nextPosition, DOOR);

currentPosition = nextPosition;

currentMatrix = glm::rotate(currentMatrix, glm::radians(-90.0f), glm::vec3(0.0f, 1.0f, 0.0f));

}

else if (c == 'R') {

glm::vec3 nextPosition = currentPosition + glm::vec3(currentMatrix * glm::vec4(forwardVector, 0.0f));

addBuildingSegment(vertices, currentPosition, nextPosition, ROOF);

}

else if (c == '+') {

currentPosition += glm::vec3(0.0f, 1.0f, 0.0f);

}

}

}

This system builds very simple buildings with randomized walls that either have a window or not. The ground floor also has a wall with a door in it. The video below showcases a simple building with a door, two windows, and a wall:

For the buildings, at least, I managed to implement a string generator that randomly generates building strings up to a given height (the result may be shorter if a roof is generated early). My implementation randomly generates 5 strings and renders the buildings from those models. Below is the implementation for it:

std::unordered_map<char, std::vector<char>> buildingRules = {

{'F', {'F', 'W'}},

{'W', {'F', 'W'}},

{'D', {'F', 'W'}},

{'+', {'F', 'W', 'R'}}

};

std::string generateBuildingString(int maxHeight = 3) {

std::string buildingString = "D";

char lastChar = 'D';

int wallCount = 1, currentHeight = 0, constraintCount;

//std::cout << "Entered function\n";

while (currentHeight < maxHeight) {

//std::cout << "Current string: " << buildingString << "\n";

constraintCount = buildingRules[lastChar].size();

char newChar = buildingRules[lastChar][rand() % constraintCount];

if (newChar == 'F' || newChar == 'W' || newChar == 'D') {

wallCount++;

}

buildingString += newChar;

if (newChar == 'R') break;

if (wallCount >= 4) {

buildingString += '+';

wallCount = 0;

currentHeight++;

lastChar = '+';

}

else {

lastChar = newChar;

}

}

return buildingString;

}
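One way to sanity-check a generated string is to trace it without rendering anything. The sketch below mirrors the turtle rules of parseBuildingLSystem using integer steps instead of glm matrices: every wall-like symbol moves one unit forward and then turns by -90 degrees around the Y axis, and ‘+’ moves one unit up. `Trace` and `traceBuilding` are hypothetical helpers, not part of my project.

```cpp
#include <cassert>
#include <string>

struct Trace {
    int x = 0, y = 0, z = 0;  // final turtle position
    int segments = 0;         // number of wall, window and door segments
    bool hasRoof = false;     // whether an 'R' symbol was seen
};

Trace traceBuilding(const std::string& s) {
    Trace t;
    int dx = 1, dz = 0;  // forward direction starts along +X
    for (char c : s) {
        if (c == 'F' || c == 'W' || c == 'D') {
            t.x += dx;
            t.z += dz;
            ++t.segments;
            // Rotating (dx, 0, dz) by -90 degrees about Y gives (-dz, 0, dx).
            int rotatedDx = -dz;
            dz = dx;
            dx = rotatedDx;
        } else if (c == '+') {
            ++t.y;  // start a new floor
        } else if (c == 'R') {
            t.hasRoof = true;
        } else {
            assert(false && "unknown symbol");
        }
    }
    return t;
}
```

For example, tracing "DWWF" ends back at the starting corner after four segments, which shows why four wall-like symbols close one square floor. The `hasRoof` flag makes it easy to spot strings that reached the maximum height without ever generating a roof.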

Unfortunately, I didn’t have much time to write a more complex system. I barely had time to create the meshes for the walls, doors, and windows.

This basically sums up my implementation. Here’s my final runtime video:

Final Discussions

Unfortunately, my implementation is admittedly very shoddy. I was originally supposed to implement this in Vulkan, but I just couldn’t grasp how Vulkan worked and wasted most of my time on it. This was terrible time management on my part, as I’m sure I could have implemented something more concrete with more interesting results. I could have tried randomizing the Perlin noise gradients and comparing grids generated by the Wang tile implementation against grids generated by pure noise sampling. The trees could be generated with more complex systems to produce more interesting models. Similarly, the buildings could have more varied structures and actual textures instead of plain grey. Moreover, because the buildings had such simple meshes, I couldn’t really simplify the models further for LOD rendering. I even had to design the road textures by hand, yet some of them are rendered in red and I have no idea why. Still, I feel like I’ve learned quite a bit and I’d like to further develop this project in my spare time.