For the first homework of my CENG 795: Advanced Ray Tracing course, I implemented a simple ray tracer in C++ that reads scene data from a JSON file and renders images using basic ray tracing methods. It computes ambient, diffuse, and specular shading for intersected objects, and it casts shadow rays to detect when a light source is blocked by another object. It also computes reflections for mirror, conductor, and dielectric objects; unfortunately, its refraction for dielectric objects is still incorrect. In this post I will explain my implementation process, the errors I came across, and the resulting images with their rendering times. I will also provide some explanations that might be redundant. This is totally for the sake of future visitors who might not be familiar with ray tracing and absolutely not to increase the length of this post.

Parsing and Storing the Scene Data

According to the homework specifications, I was required to parse both JSON and PLY files for scene data. I used Niels Lohmann’s JSON library to parse the scene information and stored it using a set of custom structs. Unfortunately, I wasn’t able to implement PLY parsing in time, so my ray tracer currently only works with JSON files.

All scene-related data is contained within a single Scene struct, which includes several other structs corresponding to each field in the JSON file. While the homework document already explains each field, I’ll briefly summarize them here for completeness.

Below is the Scene struct and a brief description of each field:

    struct Scene
    {
        // Data
        Vec3i background_color;
        float shadow_ray_epsilon;
        float intersection_test_epsilon;
        int max_recursion_depth;
        std::vector<Camera> cameras;
        Vec3f ambient_light;
        std::vector<PointLight> point_lights;
        std::vector<Material> materials;
        std::vector<Vec3f> vertex_data;
        std::vector<Mesh> meshes;
        std::vector<Triangle> triangles;
        std::vector<Sphere> spheres;
        std::vector<Plane> planes;

        // Functions
        void loadFromJSON(std::string filepath);
    };

- background_color: A vector of integers containing the RGB value of the scene background.

- shadow_ray_epsilon: A small float value used to offset the intersection points in order to prevent self-intersection.

- intersection_test_epsilon: A small float value whose purpose was not specified in the homework documentation. I initially assumed it was meant to offset the intersection points when calculating reflections. This assumption caused me quite a headache while implementing reflections.

- max_recursion_depth: The maximum number of times a ray is allowed to bounce when it is reflected.

- cameras: An array of Camera objects, each containing information about the camera vectors and the image plane.

- ambient_light: A vector of floats defining how much light an object receives even in shadow.

- point_lights: An array of PointLight objects, each containing a vector of floats for position and a vector of floats for intensity.

- materials: An array of Material objects, each containing the type of material and the necessary values for shading.

- vertex_data: An array of float vectors, each containing the position of the vertex in the given index.

- meshes: An array of Mesh objects, each containing the shading type, the index of the material, and an array of Face objects.

- triangles: An array of Triangle objects, each containing the indices of its vertices, the index of its material, and a float vector representing its normal.

- spheres: An array of Sphere objects, each containing the index of its material, the index of its center vertex, and its radius.

- planes: An array of Plane objects, each containing the index of its material, the index of its point vertex, and a float vector representing its normal.

- loadFromJSON: The function to parse and store the scene data.
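To make a few of these fields more concrete, here is a minimal sketch of how ambient_light, point_lights, and shadow_ray_epsilon might come together when shading a single hit point. It is an illustration rather than the exact code from this ray tracer: it assumes Blinn-Phong shading with inverse-square light falloff, and the Material layout, the shade function, and the occluded callback (which stands in for the scene intersection test) are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Simplified stand-in for the Vec3f type used in the Scene struct.
struct Vec3f { float x, y, z; };

Vec3f operator+(Vec3f a, Vec3f b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3f operator-(Vec3f a, Vec3f b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3f operator*(Vec3f a, float s) { return {a.x * s, a.y * s, a.z * s}; }
Vec3f mul(Vec3f a, Vec3f b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }  // component-wise
float dot(Vec3f a, Vec3f b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical layouts; the real structs may hold more fields.
struct Material { Vec3f ambient, diffuse, specular; float phong_exponent; };
struct PointLight { Vec3f position, intensity; };

// normal and to_eye are assumed to be unit length.
// occluded(origin, direction, max_distance) returns true when something
// blocks the segment between the hit point and the light.
template <typename OccludedFn>
Vec3f shade(const Material& m, Vec3f point, Vec3f normal, Vec3f to_eye,
            Vec3f ambient_light, const std::vector<PointLight>& lights,
            float shadow_ray_epsilon, OccludedFn occluded) {
    // Ambient term: received even when every light is blocked.
    Vec3f color = mul(m.ambient, ambient_light);
    for (const PointLight& light : lights) {
        Vec3f to_light = light.position - point;
        float dist2 = dot(to_light, to_light);
        assert(dist2 > 0.0f);  // the light must not sit exactly on the surface
        float dist = std::sqrt(dist2);
        Vec3f l = to_light * (1.0f / dist);
        float cos_theta = dot(normal, l);
        if (cos_theta <= 0.0f) continue;  // light is behind the surface

        // Start the shadow ray slightly off the surface to avoid self-intersection.
        Vec3f origin = point + normal * shadow_ray_epsilon;
        if (occluded(origin, l, dist)) continue;

        Vec3f irradiance = light.intensity * (1.0f / dist2);  // inverse-square falloff
        Vec3f h = l + to_eye;                                 // unnormalized half vector
        float h_len = std::sqrt(dot(h, h));
        float cos_alpha = h_len > 0.0f ? std::max(0.0f, dot(normal, h) / h_len) : 0.0f;
        color = color + mul(m.diffuse, irradiance) * cos_theta
                      + mul(m.specular, irradiance) * std::pow(cos_alpha, m.phong_exponent);
    }
    return color;
}
```

Passing the occlusion test as a callback keeps the sketch independent of how the scene stores its objects.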

Ray Calculation and Object Intersection

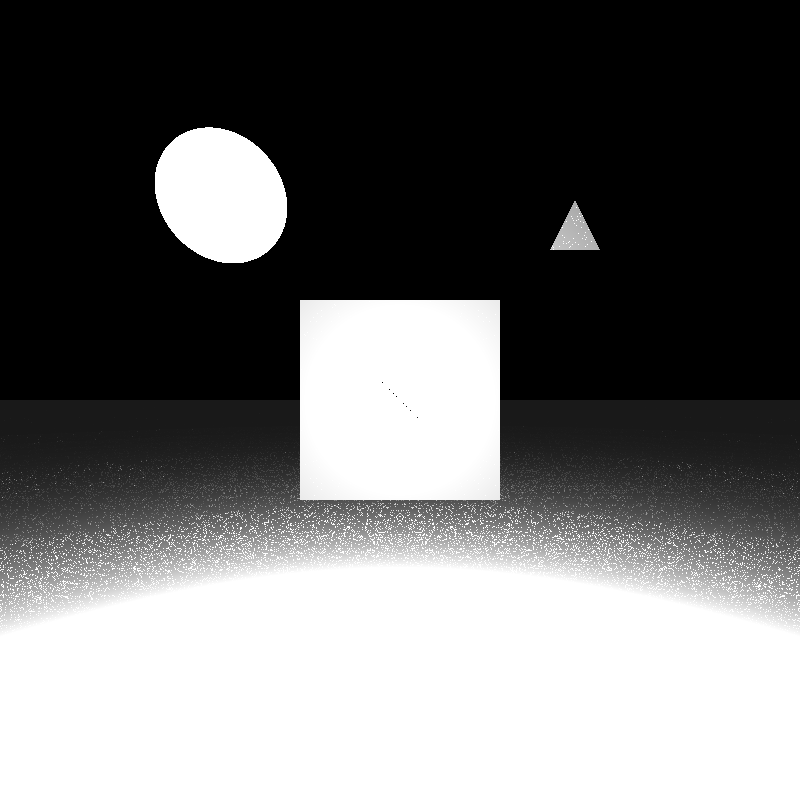

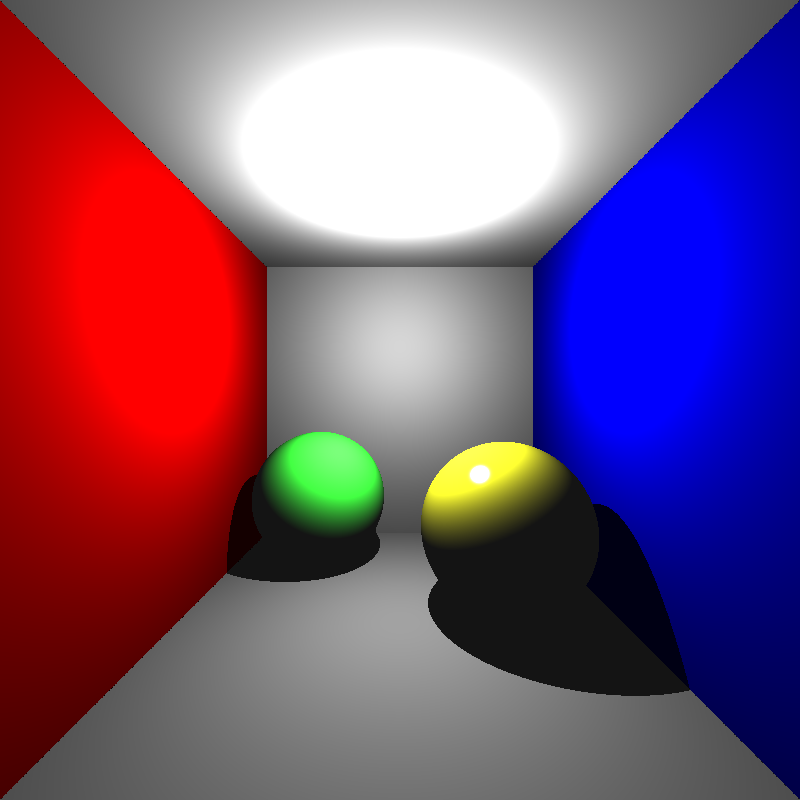

To give a bare-bones explanation, ray tracing works by tracing a ray for each pixel of an image, checking for any objects intersected along that ray, and calculating the corresponding color based on the material and lighting. My ray tracer is just as bare-bones as this explanation: it simply calculates a ray direction for each pixel, checks whether any object is intersected, and computes the shading of the object at that point. Despite these simple steps, I made a lot of mistakes in my initial implementation. I’ll first show the results of some minor ones. The images below are all rendered from simple.json:

The image above is the correct rendering of simple.json. When I finally obtained it, I thought the base of my ray tracer was complete. However, when I ran the ray tracer on the other sample scenes, I got completely black images; this scene was the only one being rendered correctly. Debugging this problem took quite a bit of time.

My first thought was to check whether there was something wrong with my intersection functions, since no intersection occurred at any pixel. I went over my implementations several times, rewrote the triangle intersection function with matrix structs to make it more readable, and tested the functions with my own sample values. There was no issue with them.

My second thought was to check whether there was something wrong with my calculations for the camera vectors and the image plane. I went over my gaze calculations for cameras with “lookAt” types and checked the corner calculations for the first pixel, yet nothing seemed to be wrong. Since the calculations for the image plane were correct, the ray directions should have been correct by extension, or so I thought.
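For reference, a ray-triangle test of this kind can be written with determinants (Cramer's rule). The sketch below is a minimal standalone version, not the exact function from this ray tracer: Vec3f is a simplified stand-in, and det, intersectTriangle, and the t_min parameter are names chosen for illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3f { float x, y, z; };

Vec3f operator-(Vec3f a, Vec3f b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

// Determinant of the 3x3 matrix whose columns are c0, c1, c2.
float det(Vec3f c0, Vec3f c1, Vec3f c2) {
    return c0.x * (c1.y * c2.z - c2.y * c1.z)
         - c1.x * (c0.y * c2.z - c2.y * c0.z)
         + c2.x * (c0.y * c1.z - c1.y * c0.z);
}

// Solves  o + t*d = a + beta*(b - a) + gamma*(c - a)  for beta, gamma, t.
// Rearranged: beta*(a - b) + gamma*(a - c) + t*d = a - o.
// Returns true and writes t when the ray hits the triangle at a distance > t_min.
bool intersectTriangle(Vec3f o, Vec3f d, Vec3f a, Vec3f b, Vec3f c,
                       float t_min, float& t) {
    assert(t_min >= 0.0f);
    Vec3f ab = a - b, ac = a - c, ao = a - o;
    float det_a = det(ab, ac, d);
    if (std::fabs(det_a) < 1e-12f) return false;  // ray parallel to the triangle plane

    float beta  = det(ao, ac, d) / det_a;
    float gamma = det(ab, ao, d) / det_a;
    float dist  = det(ab, ac, ao) / det_a;

    // Inside the triangle only if both barycentric coordinates are
    // non-negative and their sum does not exceed 1.
    if (beta < 0.0f || gamma < 0.0f || beta + gamma > 1.0f) return false;
    if (dist <= t_min) return false;  // behind the origin or too close
    t = dist;
    return true;
}
```

Testing a function like this with hand-picked rays, for example one fired straight down onto a triangle in the z = 0 plane, is a quick way to rule it out as the source of a black image.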

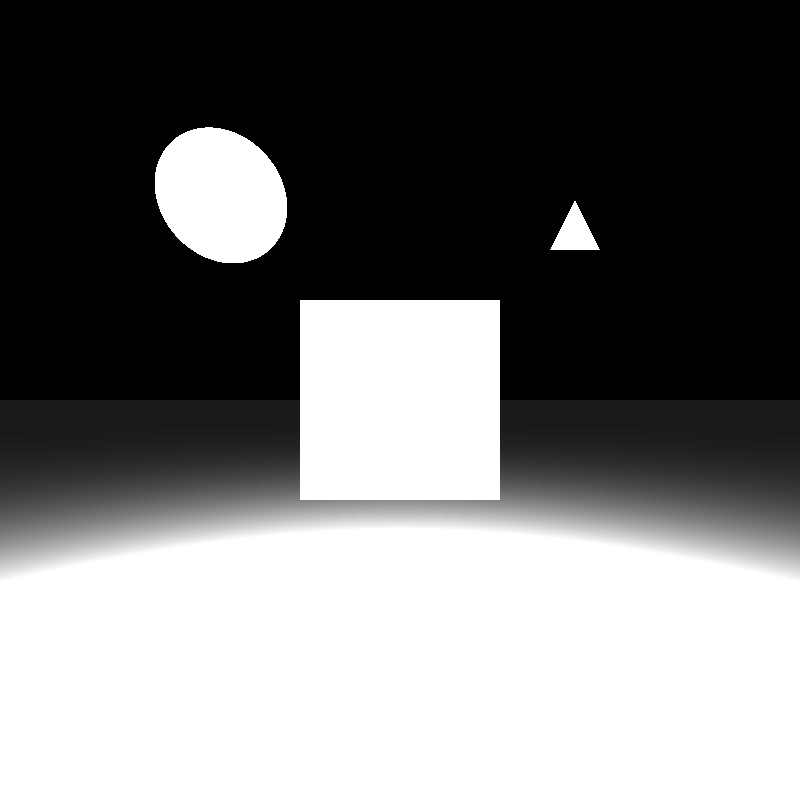

When I checked the ray directions individually while rendering, they turned out to be way off in some scenes. At this point I finally realised the issue: after calculating the pixel position, I never subtracted the camera position from it, which gave me the position vector of the pixel instead of the ray direction vector. Since the camera of the simple.json scene was located at (0, 0, 0), that image was rendered correctly, while all the other scenes, which had cameras at different positions, appeared completely black. Fixing this mistake gave me the results below:
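In code, the fix comes down to a single subtraction. The sketch below is a minimal version of the per-pixel ray computation, assuming a camera described by a position, gaze and up vectors, near-plane extents (left, right, bottom, top), a near distance, and an image resolution. The Camera layout and the rayDirection name are assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3f { float x, y, z; };

Vec3f operator+(Vec3f a, Vec3f b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3f operator-(Vec3f a, Vec3f b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3f operator*(Vec3f a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3f a, Vec3f b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3f cross(Vec3f a, Vec3f b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3f normalize(Vec3f v) { return v * (1.0f / std::sqrt(dot(v, v))); }

// Hypothetical camera layout.
struct Camera {
    Vec3f position, gaze, up;
    float left, right, bottom, top;  // near-plane extents
    float near_distance;
    int width, height;               // image resolution in pixels
};

// Direction of the ray through the center of pixel (i, j),
// where i counts columns from the left and j counts rows from the top.
Vec3f rayDirection(const Camera& cam, int i, int j) {
    assert(cam.width > 0 && cam.height > 0);
    Vec3f w = normalize(cam.gaze) * -1.0f;  // points away from the scene
    Vec3f u = normalize(cross(cam.up, w));  // camera right
    Vec3f v = cross(w, u);                  // camera up, orthogonal to u and w

    Vec3f center = cam.position - w * cam.near_distance;    // image plane center
    Vec3f top_left = center + u * cam.left + v * cam.top;   // image plane corner

    float su = (i + 0.5f) * (cam.right - cam.left) / cam.width;
    float sv = (j + 0.5f) * (cam.top - cam.bottom) / cam.height;
    Vec3f pixel = top_left + u * su - v * sv;  // a position, not yet a direction

    // The step that was missing: subtract the camera position.
    return normalize(pixel - cam.position);
}
```

With the camera at the origin, pixel and pixel - cam.position are the same vector, which is why the bug stays hidden in a scene like simple.json.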

Reflection and Refraction Calculations

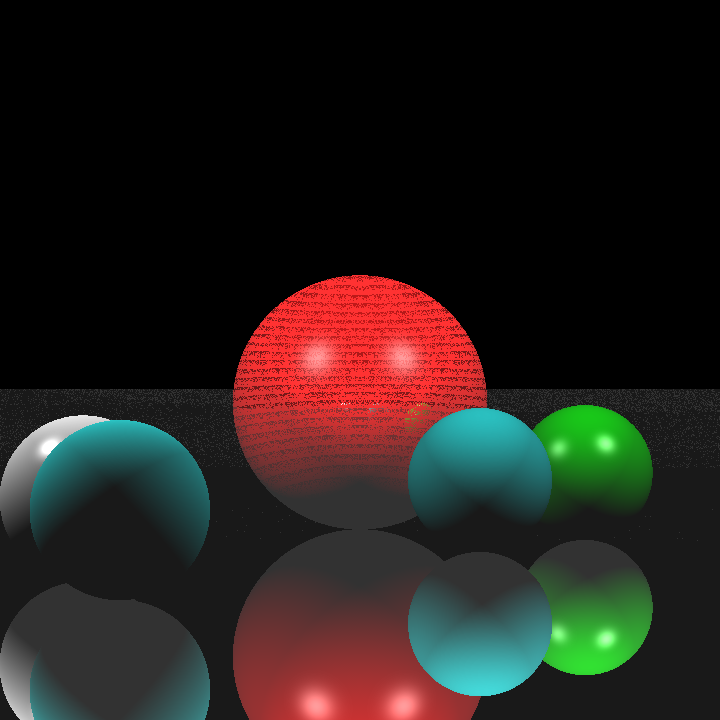

Next came the task of calculating the reflections and refractions for mirrors, conductors, and dielectrics. I initially just focused on implementing reflections for mirrors to make sure that I got the reflections working correctly first. The image below is rendered from spheres_mirror.json after I finished my initial mirror implementation:

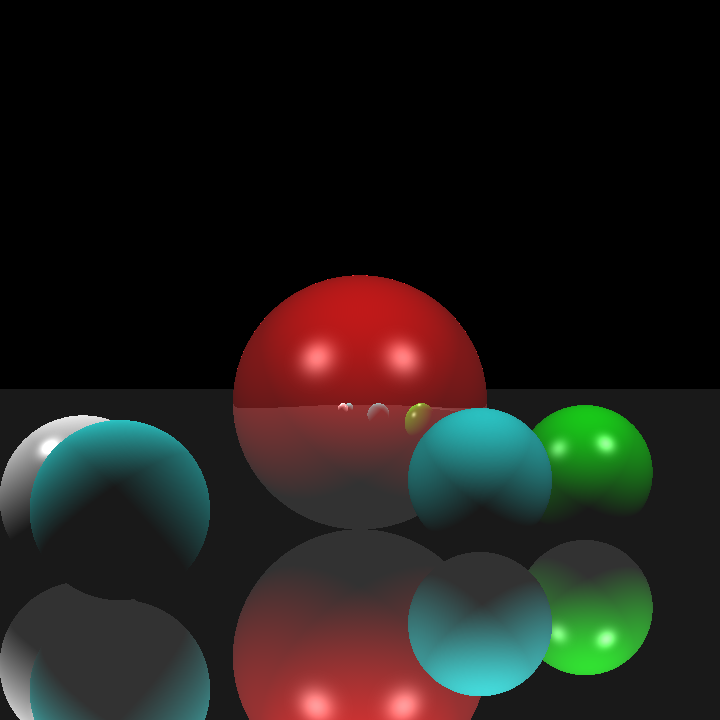

The result was a little off-putting. The reflections of the center sphere even looked a little like a creepy, smiling clown. I believe the main cause was that I calculated the reflection rays incorrectly, but I wasn’t too sure, as my initial implementation was quite messy. So I decided to redo the mirror implementation from scratch in a clearer way. My second mirror implementation gave the result below:

The result was a lot better than the first one, but there were still some noisy pixels scattered in certain areas. Tracking down the cause of this issue took a very long time, and I almost decided to just move on and leave it as it was. I went over everything several times, but I couldn’t find anything wrong with my implementation. I redid the mirror implementation, but it still gave me the same result.

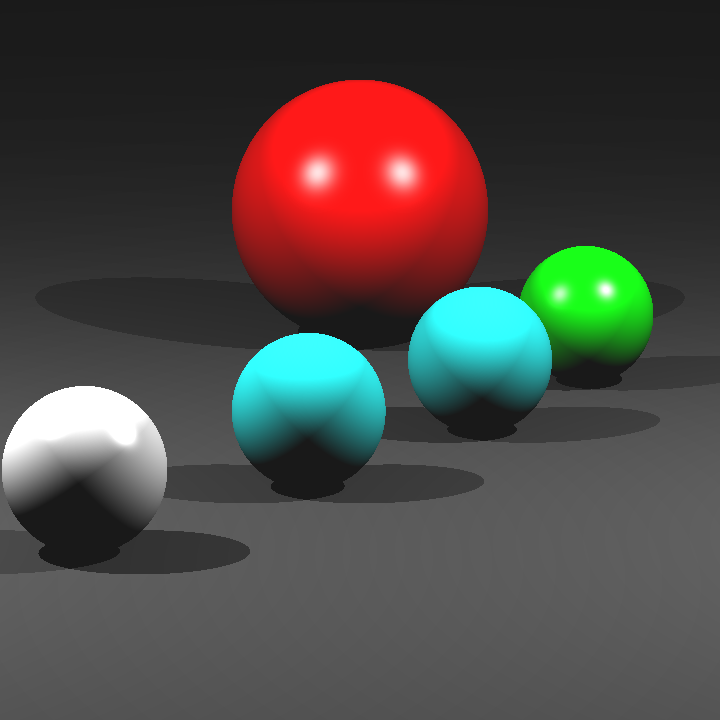

Eventually, I decided to tinker with the values inside the scene file. I tried changing almost every value without success until I finally decided to change the epsilon value. It turned out that the intersection_test_epsilon value, which was the value I used to offset the intersection point, was far too small and still caused self-intersection at certain points. When I used shadow_ray_epsilon instead, I got the result below:

At last, my mirror implementation seemed to be working correctly. After that, implementing Fresnel reflections for dielectrics and conductors didn’t take much time at all.
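The offset that removed the noise can be sketched as follows. This is a minimal illustration, assuming unit-length direction and normal vectors and a normal that faces the incoming ray. Ray, reflectRay, and the epsilon parameter are illustrative names, with epsilon standing for whichever offset value is used (shadow_ray_epsilon in my case). Offsetting along the surface normal is also an assumption; offsetting along the reflected direction is another common choice.

```cpp
#include <cassert>
#include <cmath>

struct Vec3f { float x, y, z; };

Vec3f operator+(Vec3f a, Vec3f b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3f operator-(Vec3f a, Vec3f b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3f operator*(Vec3f a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3f a, Vec3f b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray { Vec3f origin, direction; };

// Builds the mirror-reflected ray at hit_point.
// incoming: unit direction of the ray that hit the surface.
// normal:   unit surface normal, facing against the incoming ray.
Ray reflectRay(Vec3f hit_point, Vec3f incoming, Vec3f normal, float epsilon) {
    assert(epsilon > 0.0f);
    // r = d - 2 (d . n) n
    Vec3f reflected = incoming - normal * (2.0f * dot(incoming, normal));
    // Nudge the origin off the surface. If epsilon is too small, floating-point
    // error can leave the origin on or below the surface, so the new ray hits
    // the object it just left and produces noisy pixels.
    Vec3f origin = hit_point + normal * epsilon;
    return {origin, reflected};
}
```

The reflected ray is then traced like any other ray, with the recursion stopping once max_recursion_depth bounces have been reached.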

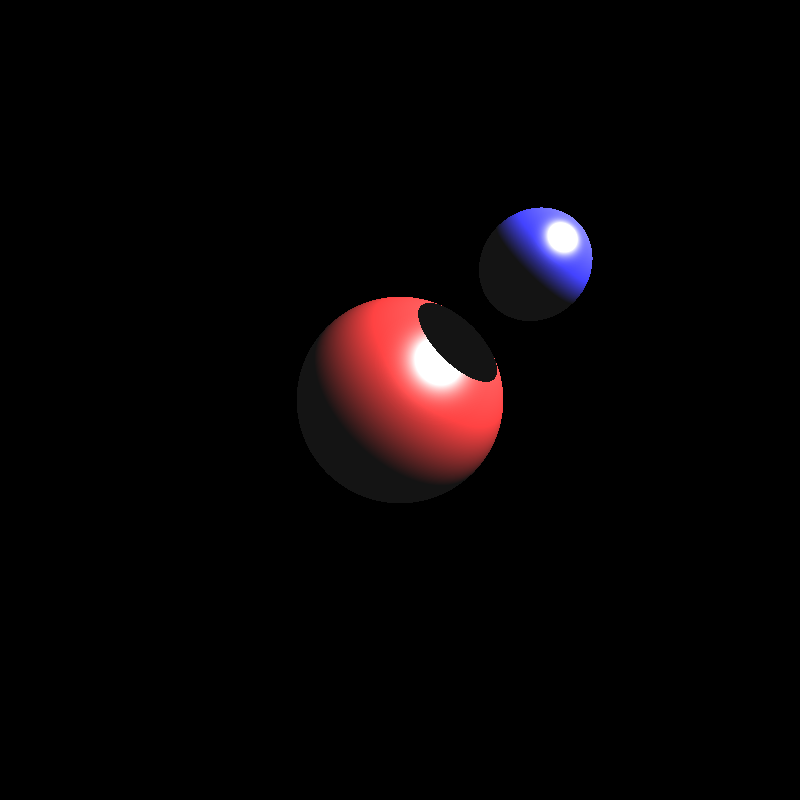

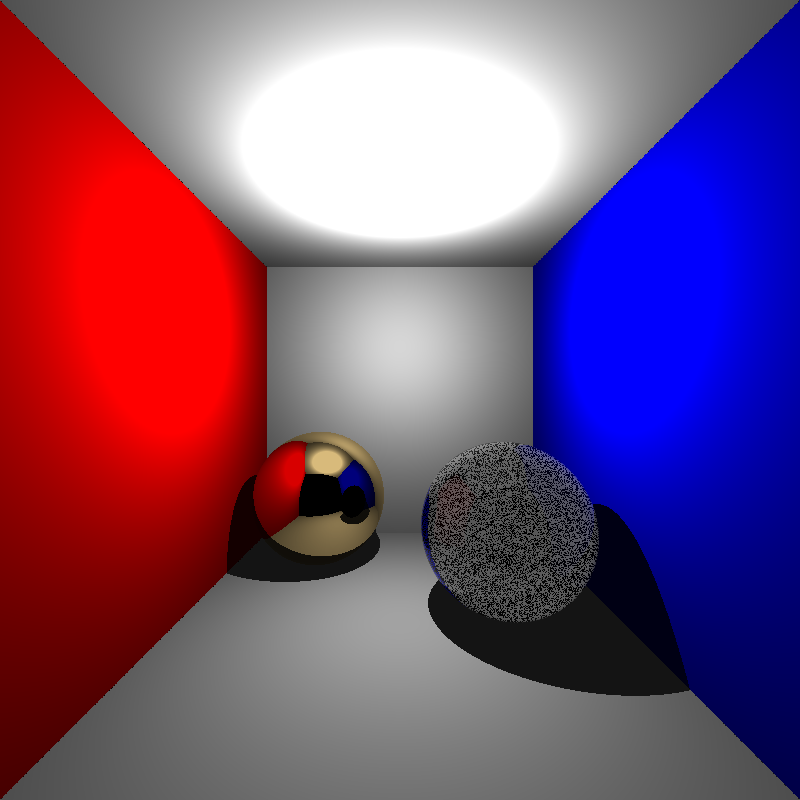

Now it was time for the final hurdle: calculating refractions. At this point I had very little time left, so I decided to implement just a single refraction ray that did not bounce after leaving the object, yet even that proved challenging. Once again, the issue was noisy values at certain points, but this time I could not come up with a solution. No matter how I changed the offset values or redid the refraction implementation, the result remained the same. Below is the rendering of cornellbox_recursive.json, which contains a single conductor sphere on the left and a single dielectric sphere on the right:
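As background for the refraction step, the direction of the refracted ray follows from Snell's law, and the split between reflected and refracted light follows from the Fresnel equations. The sketch below is a minimal illustration of both for a dielectric, not the code from this ray tracer (whose refraction is still buggy). The names refract and fresnelDielectric and their parameters are illustrative, and the sketch assumes unit-length vectors with the normal facing the incoming ray.

```cpp
#include <cassert>
#include <cmath>

struct Vec3f { float x, y, z; };

Vec3f operator+(Vec3f a, Vec3f b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3f operator-(Vec3f a, Vec3f b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3f operator*(Vec3f a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3f a, Vec3f b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Refracts unit direction d at a surface with unit normal n (facing against d),
// going from refractive index n1 into n2. Writes the refracted direction and
// the cosine of the refraction angle. Returns false on total internal reflection.
bool refract(Vec3f d, Vec3f n, float n1, float n2, Vec3f& out, float& cos_phi) {
    assert(n1 > 0.0f && n2 > 0.0f);
    float cos_theta = -dot(d, n);
    float ratio = n1 / n2;
    // cos^2(phi) from Snell's law: sin(phi) = (n1 / n2) * sin(theta)
    float cos2_phi = 1.0f - ratio * ratio * (1.0f - cos_theta * cos_theta);
    if (cos2_phi < 0.0f) return false;  // total internal reflection
    cos_phi = std::sqrt(cos2_phi);
    out = (d + n * cos_theta) * ratio - n * cos_phi;
    return true;
}

// Fraction of light that is reflected at a dielectric boundary
// (unpolarized light); the remainder, 1 - result, is refracted.
float fresnelDielectric(float cos_theta, float cos_phi, float n1, float n2) {
    float r_par  = (n2 * cos_theta - n1 * cos_phi) / (n2 * cos_theta + n1 * cos_phi);
    float r_perp = (n1 * cos_theta - n2 * cos_phi) / (n1 * cos_theta + n2 * cos_phi);
    return 0.5f * (r_par * r_par + r_perp * r_perp);
}
```

When the ray leaves the object instead of entering it, the normal has to be flipped so that it still faces the ray, and n1 and n2 swap roles. The origin of the refracted ray also needs an offset, this time to the side of the surface the ray is entering.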

Finally, here are the rendering times for various scenes:

| Scene | Render Time |
| --- | --- |
| simple.json | 1 second |
| spheres.json | 1.35 seconds |
| spheres_with_plane.json | 1 second |
| spheres_mirror.json | 1.36 seconds |
| two_spheres.json | 0.2 seconds |
| cornellbox.json | 3.9 seconds |
| cornellbox_recursive.json | 4.7 seconds |
| bunny.json | 312 seconds |
| chinese_dragon | > 12 hours |

Despite a few issues along the way, I learned a lot through this assignment. The mistakes I made were simple but taught me valuable lessons about the fundamentals of ray tracing. I’m excited to keep improving my renderer and explore more advanced techniques in the future.