What is Bidirectional Encoder Representations from Transformers (BERT)?

Bidirectional Encoder Representations from Transformers (BERT) was published by Google AI Language in 2018, and the machine learning community regards it as state of the art on a broad range of NLP tasks. Like many of Google’s other algorithm updates, the BERT algorithm was introduced to understand queries better and return more accurate results to users.

BERT reaches highly accurate results on NLP tasks by applying a few key technical innovations. One of them is bidirectional training of the Transformer, a popular attention-based model, for language modeling. This is the opposite of earlier attempts, which read a text sequence either left to right or as a combination of separate left-to-right and right-to-left passes. One of the big differences between BERT and these models is that BERT looks at the entire sentence to locate a missing word. The earlier models referred to here used only bidirectional LSTMs. Regardless of prepositions or conjunctions, these models tried to understand the data by recalling information they had held for a long time.

Devlin et al. describe these limitations as follows: “The major limitation is that standard language models are unidirectional, and this limits the choice of architectures that can be used during pre-training. For example, in OpenAI GPT, the authors use a left-to-right architecture, where every token can only attend to previous tokens in the self-attention layers of the Transformer.” So, in contrast to other models, BERT uses a masked language model, which attempts to understand each word’s relation to the other words in the sentence. Results from the paper show that a bidirectionally trained language model can have a deeper sense of context and flow than single-direction language models. The paper also describes a novel technique, Masked LM (MLM), which enables bidirectional training that was not possible in previous models.
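To make the contrast concrete, here is a minimal Python sketch of the two ideas described above: which positions a token may attend to in a left-to-right model versus a bidirectional one, and how a masked language model hides words so they must be predicted from the context on both sides. The function names (`causal_visibility`, `bidirectional_visibility`, `mask_tokens`) and the example sentence are hypothetical, chosen only for illustration. The masking step is also a simplification: it always substitutes `[MASK]`, whereas the paper's procedure sometimes keeps the original word or swaps in a random one.

```python
import random


def causal_visibility(n):
    """Left-to-right model: token i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_visibility(n):
    """Bidirectional model: every token may attend to every position."""
    return [[True] * n for _ in range(n)]


def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Simplified masked-LM input: hide some tokens and keep them as targets.

    Returns (masked_tokens, labels). labels[i] holds the original token where
    position i was masked and None elsewhere, so a model would be trained
    only on the hidden positions.
    """
    rng = random.Random(seed)  # fixed seed keeps the illustration repeatable
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(mask_token)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    tokens = "the man went to the store".split()

    print("left-to-right visibility:")
    for row in causal_visibility(len(tokens)):
        print("".join("x" if visible else "." for visible in row))

    print("bidirectional visibility:")
    for row in bidirectional_visibility(len(tokens)):
        print("".join("x" if visible else "." for visible in row))

    masked, labels = mask_tokens(tokens, mask_prob=0.3)
    print(masked)
    print(labels)
```

In the left-to-right grid, each row only has access to the words before it, so a hidden word could never be guessed from what follows. In the bidirectional grid every row sees the whole sentence, which is why the training target has to be a masked word rather than simply the next word.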

BERT Algorithm with Examples

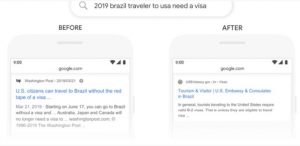

For instance, before the BERT update, a Brazilian traveler who queried Google about a US visa was shown a Washington Post news article. Because Google could not adequately interpret the user’s intent, it returned a result of that kind. With BERT, the query is understood more precisely, particularly the role of the word ‘to’ in it, and users are shown the embassy and consulate page directly.

In a different example, the user asks Google whether a medication can be picked up from the pharmacy for someone else. Before the update, Google was not able to fully comprehend the purpose of the search, so it returned general content about prescriptions. With the BERT algorithm, Google understands the whole query and returns content that answers it directly.

Source

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. (2018). BERT: Pre-training of Deep Bidirectional Transformers for Language