Hello dear graphics enjoyers! In this blog post, my friend Murat and I will show you how to build and run the project, which user controls we implemented, and some implementation details. We hope you have a good time playing Blade Master!

How to run:

Create a project directory and unzip blade-master.zip there.

On inek machines, execute the following commands in the project directory:

`make`

`LD_LIBRARY_PATH=vendor/assimp/bin ./bin/blade_master`

On other Ubuntu machines, replace the contents of `Makefile` with the contents of `makefile_for_other_ubuntu_machines.txt`, then run the following commands:

`sudo apt install libassimp-dev`

`make`

`./bin/blade_master`

The difference is that we’ve bundled prebuilt Assimp binaries (in `vendor/assimp/bin`) for the inek machines, and these may not work on other Ubuntu machines; there, the system package `libassimp-dev` is used instead.

All controls:

- Enter – start the game

- Space – stop the physics

- M – switch between modes

- A/D – control the horizontal position of the sword

- Mouse X/Y – control the sword’s roll and vertical position, respectively

- Left mouse button – slice

More on Blade Control and Animation

A slice is only as satisfying as its animation. We implemented an animation system to give the sword a sense of weight and motion.

Player Control: The control scheme is simple and direct. The player’s camera is fixed, looking straight ahead.

- A/D Keys: Control the horizontal position of the sword.

- Mouse X/Y: Control the sword’s roll and vertical position, respectively.

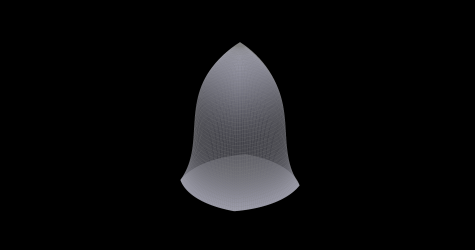

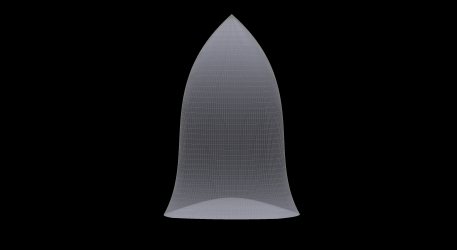

The Slice Animation: When the left mouse button is clicked, we trigger a pre-scripted animation that takes over the sword’s transform for a fraction of a second. We found that a simple linear thrust felt robotic. To make it look natural, we combined three motions, all driven by a smooth sine wave for easing:

- Forward Thrust: The sword lunges forward into the scene.

- Downward Dip: It follows a slight arc, dipping down as it thrusts, simulating the motion of a real arm.

- Pivoted Pitch: This was the most crucial detail. Instead of the whole sword rotating, we made the blade “lean forward” by rotating it around a pivot point located at the handle. This was achieved by finding the pivot’s coordinate in the model’s local space and applying a `Translate -> Rotate -> Inverse Translate` transformation sequence. This gives the impression that the handle stays stable while the blade does the cutting work.
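The pivoted pitch can be sketched as follows. This is a minimal, dependency-free C++ illustration, not the project’s code: it assumes column-major 4x4 matrices (the OpenGL/glm convention), a pitch around the local X axis, and hypothetical helpers (`Mat4`, `translation`, `rotationX`, `pivotRotationX`). In a glm-based renderer the same composition would typically be built with `glm::translate` and `glm::rotate`.

```cpp
#include <array>
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical minimal 4x4 matrix, stored column-major like OpenGL/glm:
// element (row, col) lives at index col * 4 + row.
using Mat4 = std::array<float, 16>;

Mat4 identity() {
    Mat4 m{};
    m[0] = m[5] = m[10] = m[15] = 1.0f;
    return m;
}

// Matrix product a * b.
Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};  // zero-initialized
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            for (int k = 0; k < 4; ++k)
                r[col * 4 + row] += a[k * 4 + row] * b[col * 4 + k];
    return r;
}

Mat4 translation(Vec3 t) {
    Mat4 m = identity();
    m[12] = t.x; m[13] = t.y; m[14] = t.z;
    return m;
}

// Rotation by `angle` radians around the X axis (the pitch axis assumed here).
Mat4 rotationX(float angle) {
    Mat4 m = identity();
    float c = std::cos(angle), s = std::sin(angle);
    m[5] = c;  m[9] = -s;
    m[6] = s;  m[10] = c;
    return m;
}

// Translate -> Rotate -> Inverse Translate, i.e. T(pivot) * R * T(-pivot).
Mat4 pivotRotationX(Vec3 pivot, float angle) {
    return mul(mul(translation(pivot), rotationX(angle)),
               translation({-pivot.x, -pivot.y, -pivot.z}));
}

// Applies m to a point (w = 1).
Vec3 transformPoint(const Mat4& m, Vec3 p) {
    return {m[0] * p.x + m[4] * p.y + m[8] * p.z + m[12],
            m[1] * p.x + m[5] * p.y + m[9] * p.z + m[13],
            m[2] * p.x + m[6] * p.y + m[10] * p.z + m[14]};
}
```

The name follows the code (and glm call) order; applied to a vertex, the product acts right to left: the pivot is first moved to the origin, the rotation happens there, and the pivot is moved back. Points at the handle therefore stay fixed while the tip sweeps through an arc.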

The actual slice logic is triggered at the very peak of this animation, ensuring the cut happens at the moment of maximum extension.
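A sketch of how the three motions could share one sine-based weight, and how the cut could be fired exactly once at the peak. The amplitudes, the 0.25 s duration, the normalized time `t` in [0, 1], and all names here (`SlicePose`, `slicePose`, `crossedPeak`, `SliceAnimation`) are illustrative assumptions rather than the project’s actual code or tuning.

```cpp
#include <cmath>

// Offsets applied on top of the sword's resting transform (hypothetical units).
struct SlicePose {
    float forward;  // thrust into the scene
    float dip;      // downward offset along the arc
    float pitch;    // blade lean in radians, applied around the handle pivot
};

constexpr float kPi = 3.14159265f;

// sin(pi * t) rises smoothly from 0 to 1 at t = 0.5 and falls back to 0,
// so all three motions ease in, peak together, and ease out.
SlicePose slicePose(float t, float maxThrust, float maxDip, float maxPitch) {
    float w = std::sin(kPi * t);
    return {maxThrust * w, -maxDip * w, maxPitch * w};
}

// True only on the frame where normalized time crosses the peak (t = 0.5).
bool crossedPeak(float prevT, float t) {
    return prevT < 0.5f && t >= 0.5f;
}

struct SliceAnimation {
    float duration = 0.25f;  // assumed "fraction of a second"
    float elapsed = 0.0f;
    bool active = false;

    void start() { active = true; elapsed = 0.0f; }

    float normalizedTime() const { return std::fmin(elapsed / duration, 1.0f); }

    // Advances the animation; returns true on the single frame the cut should happen.
    bool update(float dt) {
        if (!active) return false;
        float prevT = normalizedTime();
        elapsed += dt;
        float t = normalizedTime();
        if (t >= 1.0f) active = false;  // animation finished, control returns to the player
        return crossedPeak(prevT, t);
    }
};
```

Each frame, `slicePose(anim.normalizedTime(), ...)` would drive the sword’s transform, and a `true` from `update` would hand off to the slicing code.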

Adding the juice

CPU Logic: The CPU is responsible for the particle physics. Each frame, it loops through active particles, applies gravity to their velocity, updates their position, and decreases their lifetime.
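A rough sketch of this per-frame update, assuming a hypothetical `Particle` struct, gravity of -9.81 along +Y (up), and swap-and-pop removal of expired particles. The 15,000 cap mirrors the bound given for this project; the layout and function names are illustrative.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

struct Particle {
    Vec3 position;
    Vec3 velocity;
    Vec3 color;      // RGB
    float size;
    float lifetime;  // seconds left to live
};

constexpr std::size_t kMaxParticles = 15000;  // upper bound on live particles
constexpr float kGravity = -9.81f;            // assumed, +Y is up

// Adds a particle unless the pool is full; returns whether it was added.
bool spawnParticle(std::vector<Particle>& particles, const Particle& p) {
    if (particles.size() >= kMaxParticles) return false;
    particles.push_back(p);
    return true;
}

// One CPU step: gravity -> velocity, velocity -> position, then age the particle.
// Expired particles are replaced by the last element (draw order does not matter),
// and the swapped-in particle is updated on the next loop iteration.
void updateParticles(std::vector<Particle>& particles, float dt) {
    std::size_t i = 0;
    while (i < particles.size()) {
        Particle& p = particles[i];
        p.velocity.y += kGravity * dt;
        p.position.x += p.velocity.x * dt;
        p.position.y += p.velocity.y * dt;
        p.position.z += p.velocity.z * dt;
        p.lifetime -= dt;
        if (p.lifetime <= 0.0f) {
            particles[i] = particles.back();
            particles.pop_back();
        } else {
            ++i;
        }
    }
}
```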

GPU Rendering: We upload the position, color, and size of every active particle to a buffer in a single batch. Then a single instanced draw call (`glDrawArraysInstanced`) renders all active particles, with each instance using its own position, color, and size.
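A sketch of the upload side, assuming an interleaved per-instance layout of 7 floats (position xyz, color rgb, size) and a particle quad drawn as a 4-vertex triangle strip. Only the packing runs here; the OpenGL calls in the comments are standard ones, but the buffer name, attribute setup, and the `packInstances` helper are hypothetical.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };
struct Particle { Vec3 position; Vec3 velocity; Vec3 color; float size; float lifetime; };

// Assumed per-instance layout: position.xyz, color.rgb, size -> 7 floats.
constexpr std::size_t kFloatsPerInstance = 7;

// Packs every live particle into one contiguous array so it can be uploaded
// in a single call, for example (not executed here):
//   glBindBuffer(GL_ARRAY_BUFFER, instanceVbo);
//   glBufferSubData(GL_ARRAY_BUFFER, 0, data.size() * sizeof(float), data.data());
// With glVertexAttribDivisor(attrib, 1) set once for each per-instance attribute,
// one call then draws every particle:
//   glDrawArraysInstanced(GL_TRIANGLE_STRIP, 0, 4, instanceCount);
std::vector<float> packInstances(const std::vector<Particle>& particles) {
    std::vector<float> data;
    data.reserve(particles.size() * kFloatsPerInstance);
    for (const Particle& p : particles) {
        data.insert(data.end(), {p.position.x, p.position.y, p.position.z,
                                 p.color.x, p.color.y, p.color.z, p.size});
    }
    return data;
}
```

Velocity and lifetime stay on the CPU, since only what the shader needs is uploaded; `instanceCount` is `data.size() / kFloatsPerInstance`.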

There are never more than 15,000 particles alive at once, so we decided not to use compute shaders and stuck to this simpler CPU-side solution.

Basic Physics

Once an object is sliced into two new pieces, they shouldn’t just hang in the air. We needed a physics system to make them react realistically. We built a simple but effective system from the ground up.

- RigidBody Component: We created a `RigidBody` class that can be attached to any `GameObject`. It stores physical properties like mass, velocity (linear and angular), and accumulators for force and torque.

- PhysicsEngine: This central system manages a list of all active rigid bodies. In its `update(deltaTime)` loop, it performs two key steps:

  - It applies a constant downward gravitational force to every object.

  - It updates each object’s position based on its velocity, and its rotation based on its angular velocity.

- Applying Slice Forces: The most important part was making the cut feel impactful. When the `SliceManager` successfully creates two new pieces, it immediately applies two types of forces to their new `RigidBody` components:

  - Separation Force: A force is applied along the plane’s normal, pushing the two halves directly away from each other.

  - Follow-Through Force: A larger force is applied in the direction of the sword’s slice (the blade’s forward-facing “blue axis”). This gives the pieces momentum and makes it look like the sword’s energy was transferred to them.

  - Torque: A small, random torque is also applied to each piece to give them a natural-looking tumble as they fly apart.
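The physics loop and the slice forces can be sketched as below. This is a simplified illustration, not the project’s implementation: unit moment of inertia, Euler-angle rotations, semi-implicit Euler integration, and the force magnitudes are all assumptions, and `applySliceForces` is a hypothetical stand-in for what `SliceManager` does. Because forces are accumulated and consumed by the next `update`, a force added once acts like an impulse of `F * dt`.

```cpp
#include <random>
#include <vector>

struct Vec3 { float x = 0, y = 0, z = 0; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
Vec3& operator+=(Vec3& a, Vec3 b) { a = a + b; return a; }

// Hypothetical RigidBody; a fuller version would use an inertia tensor and quaternions.
struct RigidBody {
    float mass = 1.0f;
    Vec3 position, rotation;         // rotation as Euler angles (radians), a simplification
    Vec3 velocity, angularVelocity;
    Vec3 forceAccum, torqueAccum;    // cleared after every step

    void addForce(Vec3 f) { forceAccum += f; }
    void addTorque(Vec3 t) { torqueAccum += t; }
};

class PhysicsEngine {
public:
    Vec3 gravity{0.0f, -9.81f, 0.0f};  // assumed value and axis

    void add(RigidBody* body) { bodies_.push_back(body); }

    void update(float dt) {
        for (RigidBody* b : bodies_) {
            b->addForce(gravity * b->mass);                       // constant downward force
            b->velocity += b->forceAccum * (dt / b->mass);        // a = F / m
            b->angularVelocity += b->torqueAccum * dt;            // unit inertia assumed
            b->position += b->velocity * dt;                      // integrate position
            b->rotation += b->angularVelocity * dt;               // integrate rotation
            b->forceAccum = {};
            b->torqueAccum = {};
        }
    }

private:
    std::vector<RigidBody*> bodies_;
};

// Called once when a cut produces pieces a and b. planeNormal (unit) points from
// b's side toward a's side; bladeForward (unit) is the sword's forward axis.
// The default magnitudes are hypothetical tuning values.
void applySliceForces(RigidBody& a, RigidBody& b, Vec3 planeNormal, Vec3 bladeForward,
                      std::mt19937& rng, float separation = 200.0f,
                      float followThrough = 400.0f, float maxTorque = 5.0f) {
    a.addForce(planeNormal * separation);        // push the halves apart
    b.addForce(planeNormal * -separation);
    a.addForce(bladeForward * followThrough);    // carry the sword's momentum
    b.addForce(bladeForward * followThrough);
    std::uniform_real_distribution<float> d(-maxTorque, maxTorque);
    a.addTorque({d(rng), d(rng), d(rng)});       // small random tumble
    b.addTorque({d(rng), d(rng), d(rng)});
}
```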

Gameplay Structure – Game Modes and Spawning

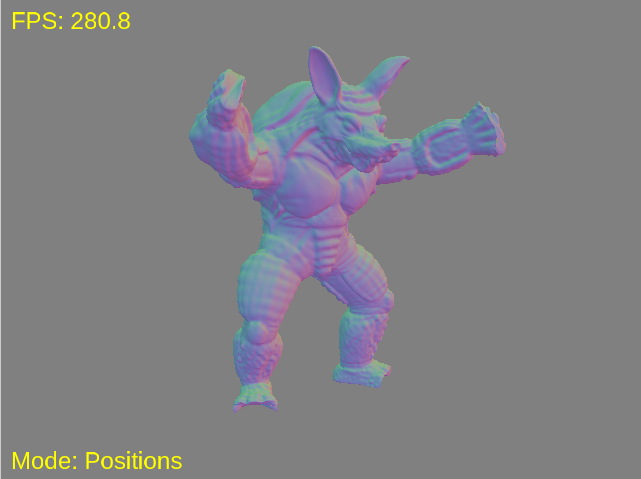

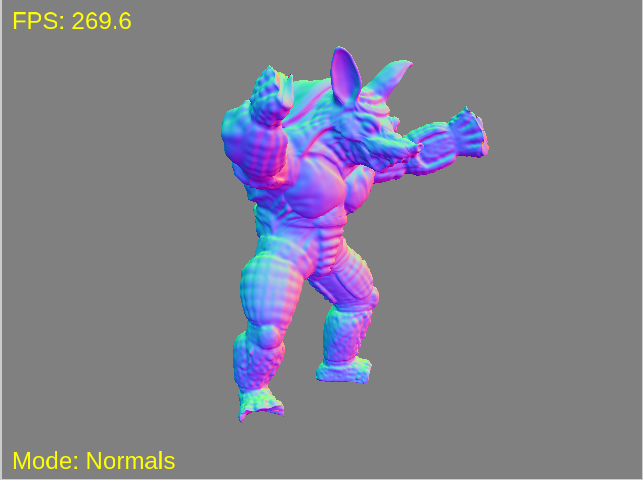

We introduced an enum to track the current mode (`FRUIT_MODE` or `DUMMY_MODE`). Pressing the M key switches between the two modes, cleans up the old objects, and prepares the new environment.

- Fruit Mode: every 5 seconds, a fruit (randomly chosen among watermelon, apple and banana) is thrown into the scene.

- Dummy Mode: a static humanoid model in the centre of the scene.
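A sketch of this mode logic under simplifying assumptions: scene objects are reduced to a list of names instead of real `GameObject`s, and `GameState`, `Fruit`, and the helper functions are hypothetical. It shows the enum-driven toggle with cleanup and a 5-second spawn timer that picks a random fruit.

```cpp
#include <random>
#include <string>
#include <vector>

enum class GameMode { FRUIT_MODE, DUMMY_MODE };
enum class Fruit { Watermelon, Apple, Banana };

class GameState {
public:
    explicit GameState(unsigned seed) : rng_(seed) {}

    GameMode mode() const { return mode_; }
    const std::vector<std::string>& objects() const { return objects_; }

    // 'M' key: switch mode, clear the old objects, and set up the new scene.
    void toggleMode() {
        mode_ = (mode_ == GameMode::FRUIT_MODE) ? GameMode::DUMMY_MODE : GameMode::FRUIT_MODE;
        objects_.clear();
        spawnTimer_ = 0.0f;
        if (mode_ == GameMode::DUMMY_MODE) objects_.push_back("dummy");  // static, centred
    }

    // Called once per frame; in fruit mode, throws a random fruit every 5 seconds.
    void update(float dt) {
        if (mode_ != GameMode::FRUIT_MODE) return;
        spawnTimer_ += dt;
        while (spawnTimer_ >= kSpawnInterval) {
            spawnTimer_ -= kSpawnInterval;
            objects_.push_back(fruitName(randomFruit()));
        }
    }

private:
    static constexpr float kSpawnInterval = 5.0f;

    Fruit randomFruit() {
        std::uniform_int_distribution<int> pick(0, 2);
        return static_cast<Fruit>(pick(rng_));
    }

    static std::string fruitName(Fruit f) {
        switch (f) {
            case Fruit::Watermelon: return "watermelon";
            case Fruit::Apple: return "apple";
            case Fruit::Banana: return "banana";
        }
        return "";
    }

    GameMode mode_ = GameMode::FRUIT_MODE;
    std::vector<std::string> objects_;
    float spawnTimer_ = 0.0f;
    std::mt19937 rng_;
};
```

Subtracting the interval instead of resetting the timer to zero keeps the spawn rhythm steady even when a frame overshoots the 5-second mark.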

Also, independently of the game mode, you can stop the physics with Space so that the objects stay static, and then try slicing a fruit or the dummy in several directions at once.

Please see our demo video below:

Murat Şengül & Omar Afandi